DPC (DPP) Screening Methods for Group Lasso

Group Lasso is a widely used sparse modeling technique to identify important groups of variables.

For Group Lasso, the DPC screening rule is developed as an extension of the DPP method for standard Lasso.

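DPC can be integrated with any existing Group Lasso solver. The code will be available soon. The DPC rule itself is easy to implement: it only requires matrix-vector products and vector norms, together with the spectral norms of the group matrices, which can be precomputed once.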

Publications

Lasso Screening Rules via Dual Polytope Projection. (Improved version of the paper accepted at NIPS 2013.)

Jie Wang, Peter Wonka, and Jieping Ye.

Journal of Machine Learning Research, to appear.

Lasso Screening Rules via Dual Polytope Projection.

Jie Wang, Jiayu Zhou, Peter Wonka, and Jieping Ye.

NIPS 2013.

Note that the Enhanced DPP (EDPP) rule to appear in JMLR is an improved version of the EDPP rule in the NIPS paper. The two are NOT the same.

The EDPP rule in the NIPS paper corresponds to “Improvement 1” in the journal version. The EDPP rule introduced below is the one from the journal version.

Formulation of Group Lasso

Let  denote the

denote the  dimensional response vector and

dimensional response vector and ![{bf X} = [{bf x}_1, {bf x}_2, ldots, {bf x}_p]](eqs/1254447840-130.png) be the

be the  feature matrix. Let

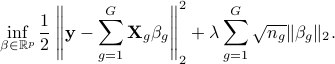

feature matrix. Let  be the regularization parameter. With the group information available, the Group Lasso problem is formulated as the following optimization problem:

be the regularization parameter. With the group information available, the Group Lasso problem is formulated as the following optimization problem:

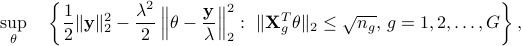

The dual problem of Lasso is

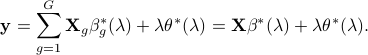

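We denote the primal and dual optimal solutions of Group Lasso by $\boldsymbol\beta^*(\lambda)$ and $\boldsymbol\theta^*(\lambda)$, respectively, which depend on the value of $\lambda$. $\boldsymbol\beta^*(\lambda)$ and $\boldsymbol\theta^*(\lambda)$ are related by

$$\mathbf{y}=\sum_{g=1}^{G}\mathbf{X}_g\boldsymbol\beta_g^*(\lambda)+\lambda\,\boldsymbol\theta^*(\lambda).$$

Moreover, since maximizing the dual objective is equivalent to minimizing $\|\boldsymbol\theta-\mathbf{y}/\lambda\|_2$, the dual optimal solution is the projection of $\mathbf{y}/\lambda$ onto the dual feasible set, which is the intersection of a set of ellipsoids. The optimality conditions also imply that $\boldsymbol\beta_g^*(\lambda)=\mathbf{0}$ whenever $\|\mathbf{X}_g^T\boldsymbol\theta^*(\lambda)\|_2<\sqrt{n_g}$; screening rules such as DPC exploit this by bounding $\|\mathbf{X}_g^T\boldsymbol\theta^*(\lambda)\|_2$ without solving the problem.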
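To make the primal-dual relationship concrete, here is a minimal pure-Python sketch (standard library only) that evaluates both objectives and builds a dual-feasible point from any candidate $\boldsymbol\beta$ via $\boldsymbol\theta=(\mathbf{y}-\sum_g\mathbf{X}_g\boldsymbol\beta_g)/\lambda$, shrunk when it violates a constraint. The data layout (each $\mathbf{X}_g$ stored as a list of column vectors) and all function names are hypothetical choices for illustration, not part of the DPC code.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def residual(y, groups, beta):
    """r = y - sum_g X_g beta_g; each group is a list of column vectors."""
    r = list(y)
    for cols, b in zip(groups, beta):
        for col, coef in zip(cols, b):
            r = [ri - coef * ci for ri, ci in zip(r, col)]
    return r


def primal_obj(y, groups, beta, lam):
    """0.5 * ||y - sum_g X_g beta_g||^2 + lam * sum_g sqrt(n_g) * ||beta_g||_2."""
    r = residual(y, groups, beta)
    penalty = sum(math.sqrt(len(b)) * math.sqrt(dot(b, b)) for b in beta)
    return 0.5 * dot(r, r) + lam * penalty


def dual_obj(y, theta, lam):
    """0.5 * ||y||^2 - lam^2 / 2 * ||theta - y / lam||^2."""
    d = [t - yi / lam for t, yi in zip(theta, y)]
    return 0.5 * dot(y, y) - 0.5 * lam ** 2 * dot(d, d)


def feasible_theta(y, groups, beta, lam):
    """theta = (y - X beta) / lam, shrunk so that ||X_g^T theta||_2 <= sqrt(n_g) for all g."""
    theta = [ri / lam for ri in residual(y, groups, beta)]
    worst = max(math.sqrt(sum(dot(c, theta) ** 2 for c in cols)) / math.sqrt(len(cols))
                for cols in groups)
    scale = max(1.0, worst)
    return [t / scale for t in theta]
```

By weak duality, `dual_obj` at any feasible point never exceeds `primal_obj`, so their difference is a duality gap that a solver can use as a stopping criterion; at the optimum the two values coincide.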

DPC (EDPP) rule for Group Lasso

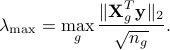

Let us define

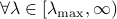

It is well-known that  , we have

, we have

In other words, the Group Lasso problem admits closed form solutions when  .

.
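The closed-form regime is easy to check numerically. The sketch below (pure Python, hypothetical helper names, each $\mathbf{X}_g$ stored as a list of columns) computes $\lambda_{\max}$ together with the index of a group that attains it, and returns the trivial solution for $\lambda\ge\lambda_{\max}$.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def group_score(cols, v):
    """||X_g^T v||_2 / sqrt(n_g), with X_g stored as a list of columns."""
    return math.sqrt(sum(dot(c, v) ** 2 for c in cols)) / math.sqrt(len(cols))


def lambda_max(y, groups):
    """Return (lambda_max, g_star), where g_star indexes a group attaining the maximum."""
    scores = [group_score(cols, y) for cols in groups]
    g_star = max(range(len(groups)), key=scores.__getitem__)
    return scores[g_star], g_star


def closed_form(y, groups, lam):
    """For lam >= lambda_max: beta*(lam) = 0 and theta*(lam) = y / lam."""
    lam_max, _ = lambda_max(y, groups)
    if lam < lam_max:
        raise ValueError("closed form only holds for lam >= lambda_max")
    beta = [[0.0] * len(cols) for cols in groups]
    theta = [yi / lam for yi in y]
    return beta, theta
```

The group index `g_star` is kept because the screening rule needs the group matrix attaining $\lambda_{\max}$ when it starts from $\lambda_0=\lambda_{\max}$.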

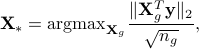

Let ![lambda_0 in (0,lambda_{rm max}]](eqs/1139229832-130.png) and

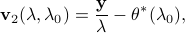

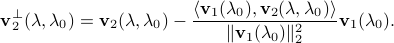

and ![lambda in (0,lambda_0]](eqs/1747055416-130.png) . We define

. We define

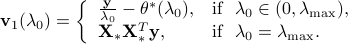

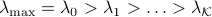
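The three vectors translate directly into code. This is a minimal pure-Python sketch under the same assumptions as before (vectors as lists, $\mathbf{X}_*$ as a list of columns, hypothetical function names); `v2_perp` is simply the component of $\mathbf{v}_2$ orthogonal to $\mathbf{v}_1$.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def v1(y, theta0, lam0, lam_max, x_star):
    """v_1(lambda_0); x_star is the group matrix X_* (list of columns) attaining lambda_max."""
    if lam0 < lam_max:
        return [yi / lam0 - t for yi, t in zip(y, theta0)]
    # lambda_0 == lambda_max: v_1 = X_* X_*^T y
    coefs = [dot(c, y) for c in x_star]
    return [sum(a * c[i] for a, c in zip(coefs, x_star)) for i in range(len(y))]


def v2(y, theta0, lam):
    """v_2(lambda, lambda_0) = y / lambda - theta*(lambda_0)."""
    return [yi / lam - t for yi, t in zip(y, theta0)]


def v2_perp(v1_vec, v2_vec):
    """Subtract from v_2 its projection onto v_1."""
    s = dot(v1_vec, v2_vec) / dot(v1_vec, v1_vec)
    return [b - s * a for a, b in zip(v1_vec, v2_vec)]
```

The branch `lam0 < lam_max` compares floats; in practice one would pass an explicit flag for the $\lambda_0=\lambda_{\max}$ case rather than rely on exact equality.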

For the Group Lasso problem, suppose we are given a sequence of parameter values

. Then for any integer

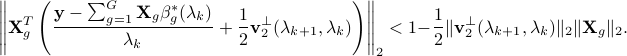

. Then for any integer  , we have

, we have ![[beta_g^*(lambda_{k+1})]_i=0](eqs/273653035-130.png) if

if  is known and the following holds:

is known and the following holds:
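A single screening step can be sketched as follows, given $\boldsymbol\theta^*(\lambda_k)$ and $\mathbf{v}_2^{\perp}(\lambda_{k+1},\lambda_k)$. As a labeled simplification, this sketch replaces the spectral norm $\|\mathbf{X}_g\|_2$ by the Frobenius norm, which is an upper bound; that only shrinks the right-hand side, so every group it discards would also be discarded by the exact rule, at the cost of discarding fewer groups. With NumPy available, `numpy.linalg.norm(X_g, 2)` gives the exact spectral norm. Function names are hypothetical.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def gnorm(cols, v):
    """||X_g^T v||_2, with X_g stored as a list of columns."""
    return math.sqrt(sum(dot(c, v) ** 2 for c in cols))


def frobenius(cols):
    """Frobenius norm of X_g; an upper bound on the spectral norm ||X_g||_2."""
    return math.sqrt(sum(dot(c, c) for c in cols))


def dpc_discard(theta_k, vp, groups):
    """True for each group the (Frobenius-relaxed) test certifies as beta_g*(lambda_{k+1}) = 0."""
    vp_norm = math.sqrt(dot(vp, vp))
    center = [t + 0.5 * p for t, p in zip(theta_k, vp)]
    return [gnorm(cols, center) < math.sqrt(len(cols)) - 0.5 * vp_norm * frobenius(cols)
            for cols in groups]
```

For single-column groups the Frobenius and spectral norms coincide, so the relaxation only matters for groups with two or more features.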

To start from $\lambda_0=\lambda_{\max}$, it is worthwhile to note that $\boldsymbol\beta^*(\lambda_0)=\mathbf{0}$ and $\boldsymbol\theta^*(\lambda_0)=\mathbf{y}/\lambda_0$.

. At the

step, suppose that

step, suppose that  is known. To determine the zero coefficients of

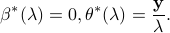

is known. To determine the zero coefficients of  , DPC (EDPP) needs to compute

, DPC (EDPP) needs to compute  , which depends on

, which depends on  and

and  . Thus, we need to compute

. Thus, we need to compute  . Indeed,

. Indeed,  can be computed by

can be computed by  .

After DPC (EDPP) identifies the zero coefficients of $\boldsymbol\beta^*(\lambda_{k+1})$, the corresponding groups of features can be removed from the optimization, and you can apply your favourite solver to compute the remaining coefficients of $\boldsymbol\beta^*(\lambda_{k+1})$. Then go to the next step, until the Group Lasso problems at all given parameter values are solved.
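The whole sequential procedure can be sketched end to end in pure Python. Several assumptions are worth stating plainly. The inner solver here is plain proximal gradient (ISTA) with group soft-thresholding; it is a hypothetical stand-in for "your favourite solver", not part of DPC. The spectral norm is again relaxed to the Frobenius norm. The screening guarantee assumes $\boldsymbol\theta^*(\lambda_k)$ is exact, so with an iterative solver it should be run to high accuracy. `lambdas[0]` is assumed to equal $\lambda_{\max}$.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def gnorm(cols, v):
    """||X_g^T v||_2, with X_g stored as a list of columns."""
    return math.sqrt(sum(dot(c, v) ** 2 for c in cols))


def residual(y, groups, beta):
    """r = y - sum_g X_g beta_g."""
    r = list(y)
    for cols, b in zip(groups, beta):
        for c, bj in zip(cols, b):
            r = [ri - bj * ci for ri, ci in zip(r, c)]
    return r


def solve_active(y, groups, lam, active, iters=2000):
    """Stand-in solver: ISTA on the kept groups only; discarded groups stay at 0."""
    beta = [[0.0] * len(cols) for cols in groups]
    # Step 1/L, with L = squared Frobenius norm of the kept columns (>= ||X_A||_2^2).
    L = sum(dot(c, c) for g in active for c in groups[g]) or 1.0
    for _ in range(iters):
        r = residual(y, groups, beta)  # one residual per iteration: a full gradient step
        for g in active:
            u = [b + dot(c, r) / L for b, c in zip(beta[g], groups[g])]
            nu = math.sqrt(dot(u, u))
            thresh = lam * math.sqrt(len(u)) / L
            shrink = max(0.0, 1.0 - thresh / nu) if nu > 0 else 0.0
            beta[g] = [shrink * ui for ui in u]  # group soft-thresholding
    return beta


def dpc_path(y, groups, lambdas):
    """Sequential DPC screening; assumes lambdas[0] == lambda_max > lambdas[1] > ..."""
    scores = [gnorm(cols, y) / math.sqrt(len(cols)) for cols in groups]
    g_star = max(range(len(groups)), key=scores.__getitem__)
    beta = [[0.0] * len(cols) for cols in groups]  # beta*(lambda_0) = 0
    theta = [yi / lambdas[0] for yi in y]          # theta*(lambda_0) = y / lambda_0
    path = [(beta, [])]
    for lam_k, lam_next in zip(lambdas, lambdas[1:]):
        if lam_k >= scores[g_star]:  # lambda_k = lambda_max: v_1 = X_* X_*^T y
            xs = groups[g_star]
            v1 = [sum(dot(c, y) * c[i] for c in xs) for i in range(len(y))]
        else:
            v1 = [yi / lam_k - t for yi, t in zip(y, theta)]
        v2 = [yi / lam_next - t for yi, t in zip(y, theta)]
        s = dot(v1, v2) / dot(v1, v1)
        vp = [b - s * a for a, b in zip(v1, v2)]
        vp_norm = math.sqrt(dot(vp, vp))
        center = [t + 0.5 * p for t, p in zip(theta, vp)]
        # Frobenius norm replaces the spectral norm ||X_g||_2 (a safe upper bound).
        discarded = [g for g, cols in enumerate(groups)
                     if gnorm(cols, center) < math.sqrt(len(cols))
                     - 0.5 * vp_norm * math.sqrt(sum(dot(c, c) for c in cols))]
        active = [g for g in range(len(groups)) if g not in discarded]
        beta = solve_active(y, groups, lam_next, active)
        # theta*(lambda_{k+1}) = (y - sum_g X_g beta_g*(lambda_{k+1})) / lambda_{k+1}
        theta = [ri / lam_next for ri in residual(y, groups, beta)]
        path.append((beta, discarded))
    return path
```

Each entry of the returned path pairs the coefficients at $\lambda_k$ with the 0-based indices of the groups DPC removed before solving at that value.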